I like to think there are some solid foundations to my life. I will be able to do the Monday New York Times crossword and I will not be able to do the Thursday version. My dental hygienist will not be satisfied with the amount of flossing I do. I will get my favorite spaghetti meal served on my birthday.

And I can trust American Scientist to write articles that teach me science in an accessible, yet challenging, way. American Scientist is the magazine of the honor society Sigma Xi and is my favorite science magazine since the demise of The Sciences, my all-time favorite science magazine. Or it was.

That particular pillar of my existence crumbled into dust today as I was reading an otherwise interesting article on “Paradoxes, Contradictions, and the Limits of Science” by Noson Yanofsky. In this article, there is a sidebar on the Traveling Salesman Problem that makes me weep.

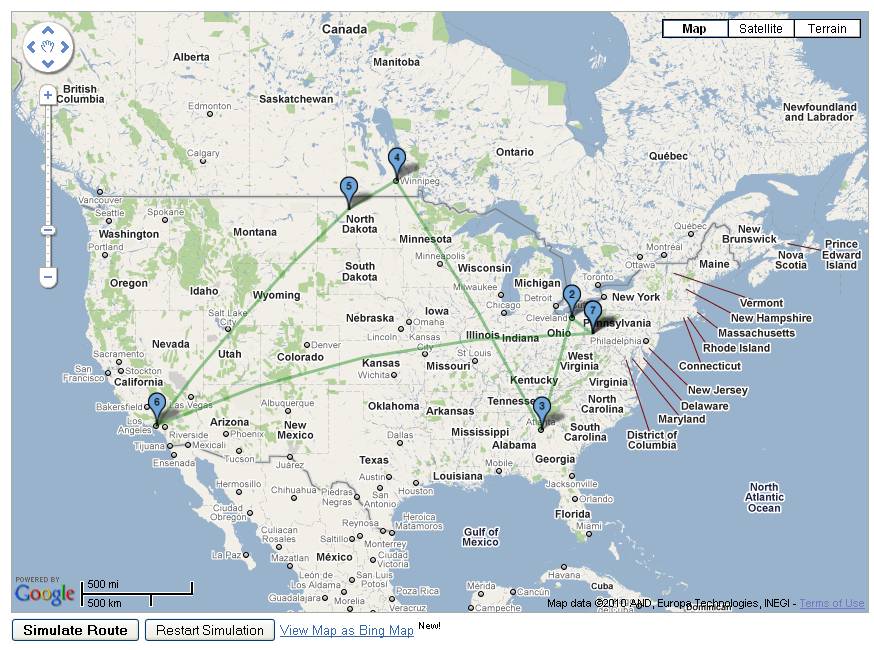

It is fortunate that they have put this behind their firewall so as to minimize the damage they have done. But let me briefly describe: there is a map of the United States, along with a truly atrocious Traveling Salesman tour through the US, apparently visiting each zip code (approximately 43,000 zip codes). The tour is created by “Hilbert Curves” and is “about 75% optimal”. Then, the inevitable, “Finding the actual shortest route would take a computer essentially an infinite amount of time to compute”. Cue the sidebar: “But what about going to 100 different cities? A computer would have to check 100x99x98x….x2x1 possible routes.” They then helpfully expand that into the 157 digit number of potential routes. “The computer would have see how long the route takes, and then compare all of them to find the shortest route.”

The sidebar continues with the drivel of computer speed and the number of centuries it would take.

No, no. 100! times no. It is not necessary to check all the routes. 100-city TSPs can be solved to proven optimality in a very short time. And even 43,000 zip codes can be solved to optimality with a non-infinite (and quite practical) amount of computation. Using integer programming and specialized techniques, Bill Cook and his gang at Concorde have solved to optimality problems with up to 85,900 cities. The “zip code” problem is just like the problems Cook has solved, just smaller.
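To make the "you do not have to check every route" point concrete, here is a minimal sketch in Python. It is emphatically not what Concorde does (Concorde relies on integer programming and cutting planes); it is the textbook Held-Karp dynamic program, which I am swapping in only because it fits in a few lines. It still finds a provably optimal tour while examining on the order of n²·2ⁿ partial paths rather than all (n-1)! tours. The function names are my own, and this particular method only scales to a couple dozen cities.

```python
import itertools
import math


def distance_matrix(points):
    """Euclidean distances between every pair of (x, y) points."""
    return [[math.hypot(ax - bx, ay - by) for (bx, by) in points]
            for (ax, ay) in points]


def brute_force(dist):
    """Complete enumeration: try every ordering of cities 1..n-1 after city 0."""
    n = len(dist)
    best = math.inf
    for perm in itertools.permutations(range(1, n)):
        order = (0,) + perm
        length = sum(dist[order[i]][order[(i + 1) % n]] for i in range(n))
        best = min(best, length)
    return best


def held_karp(dist):
    """Optimal tour length by dynamic programming over subsets of cities."""
    n = len(dist)
    if n == 1:
        return 0.0
    # best[(mask, j)] = shortest path that starts at city 0, visits exactly
    # the cities whose bits are set in mask, and ends at city j.
    best = {(1 << j, j): dist[0][j] for j in range(1, n)}
    for size in range(2, n):
        for subset in itertools.combinations(range(1, n), size):
            mask = sum(1 << j for j in subset)
            for j in subset:
                prev = mask ^ (1 << j)  # same set without the endpoint j
                best[(mask, j)] = min(best[(prev, k)] + dist[k][j]
                                      for k in subset if k != j)
    full = sum(1 << j for j in range(1, n))
    # Close the tour by returning to city 0 from the best final city.
    return min(best[(full, j)] + dist[j][0] for j in range(1, n))
```

On a small instance both functions return the same optimal length, but only one of them got there by looking at every route. The methods that actually solve 85,900-city instances are far more sophisticated than this; the sketch only shows that proving optimality and enumerating everything are different things.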

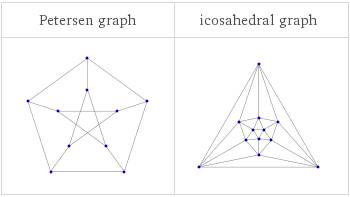

The Traveling Salesman problem is NP-complete (or NP-hard, depending on what you mean by the TSP). But as a computational approach, the “figure out how big 100! is, and that is the running time” argument completely misunderstands the role optimization and algorithms play in truly solving hard problems (I mean it: the true optimum is found and proved to be optimal). My most recent blog post (sadly too long ago) was on this very subject. Perhaps I should rename this blog “Michael Trick’s Rants about Complete Enumeration Arguments”.

Even if you want to call this instance impossible to solve, why would you ever use a Hilbert Curve heuristic for it? Don’t get me wrong: I am a student of John Bartholdi, and both his paper with Loren Platzman on spacefilling curves and his paper applying the idea to Meals-on-Wheels scheduling changed my life. But these techniques don’t give great tours (they have lots of other great properties).
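For readers who have not seen the idea, here is a rough sketch of a spacefilling curve heuristic in Python. It is my own illustration, not Bartholdi and Platzman's construction and not the code behind the published map: it uses the standard coordinate-to-index conversion for a Hilbert curve, assumes the points have already been scaled into the unit square, and the names `hilbert_index` and `hilbert_tour` are hypothetical.

```python
def hilbert_index(side, x, y):
    """Position of grid cell (x, y) along a Hilbert curve filling a
    side-by-side grid, where side is a power of two."""
    d = 0
    s = side // 2
    while s > 0:
        rx = 1 if (x & s) else 0
        ry = 1 if (y & s) else 0
        d += s * s * ((3 * rx) ^ ry)
        # Rotate/flip the quadrant so the sub-curve is oriented correctly.
        if ry == 0:
            if rx == 1:
                x = side - 1 - x
                y = side - 1 - y
            x, y = y, x
        s //= 2
    return d


def hilbert_tour(points, order=10):
    """Visit points (assumed to lie in [0, 1] x [0, 1]) in the order the
    Hilbert curve passes through their grid cells."""
    side = 2 ** order

    def cell(v):
        # Map a coordinate in [0, 1] to a grid column/row, clamping 1.0.
        return min(int(v * side), side - 1)

    return sorted(range(len(points)),
                  key=lambda i: hilbert_index(side, cell(points[i][0]),
                                              cell(points[i][1])))
```

The whole heuristic is one sort, which is where the other great properties come from: it is fast, it needs no distance matrix, and adding or dropping a stop does not reshuffle the rest of the tour. Nothing in it looks at tour length, though, which is why the resulting routes are not great.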

It appears American Scientist has taken this tour from this webpage. The published map is the bottom one on the page. The resulting tour is clearly not good. Practically any other modern heuristic would do better. This is no complaint about Robert Kosara, the author of the original article: the page describes what he wanted to do, and he did it well. But for American Scientist to put this forward as an example of what heuristics can do completely misrepresents the state of the art for heuristic approaches. And I have no idea what 75% optimal means: the tour is longer than optimal, of course.

I don’t know if this means I should not trust American Scientist in areas that are not my specialty. On something I know a bit about, the magazine has failed miserably.

(*) Our favorite recipe so far has been
