Wolfram|Alpha is an interesting service. It is not a search engine per se. If you ask it “What is Operations Research?” it draws a blank (*) (mimicking most of the world), and if you ask it “Who is Michael Trick?” it returns information on two movies, “Michael” and “Trick” (*). But if you give it a date (say, April 15, 1960), it will return all sorts of information about that date:

Time difference from today (Friday, July 31, 2009):

- 49 years 3 months 15 days ago
- 2572 weeks ago
- 18 004 days ago
- 49.29 years ago
- 106th day
- 15th week

Observances for April 15, 1960 (United States):

- Good Friday (religious day)
- Orthodox Good Friday (religious day)

Notable events for April 15, 1960:

- Birth of Dodi al-Fayed (businessperson) (1955): 5th anniversary
- Birth of Josiane Balasko (actor) (1950): 10th anniversary
- Birth of Charles Fried (government) (1935): 25th anniversary

Daylight information for April 15, 1960 in Pittsburgh, Pennsylvania:

- sunrise | 5:41 am EST
- sunset | 6:59 pm EST
- duration of daylight | 13 hours 18 minutes

Phase of the Moon: waning gibbous moon (*)

(Somehow it missed me in the famous birthdays: I guess their database is still incomplete)
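Several of those numbers are plain calendar arithmetic, so they are easy to double-check. The sketch below uses Python's standard `datetime` module; it is an independent check, not a description of how Wolfram|Alpha computes anything. Two assumptions are worth flagging: "years ago" is approximated by dividing by an average year of 365.25 days, and "15th week" is read as the ISO 8601 week number. Wolfram|Alpha's actual conventions are unknown here; these choices simply happen to reproduce its figures.

```python
from datetime import date

# The two dates from the Wolfram|Alpha output above.
birth = date(1960, 4, 15)
today = date(2009, 7, 31)

days = (today - birth).days             # total elapsed days
weeks = days // 7                       # whole weeks elapsed
years = days / 365.25                   # ASSUMPTION: average year of 365.25 days
day_of_year = birth.timetuple().tm_yday # 1960 is a leap year, so Feb has 29 days
iso_week = birth.isocalendar()[1]       # ASSUMPTION: "15th week" means ISO 8601 week
weekday = birth.strftime("%A")          # weekday name (locale-dependent)

print(days, weeks, round(years, 2), day_of_year, iso_week, weekday)
```

In the default C locale this prints `18004 2572 49.29 106 15 Friday`, matching the day count, week count, fractional years, day of year, and week number above (and consistent with April 15, 1960 being Good Friday).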

It even does simple optimization:

min {5 x^2+3 x+12} = 231/20 at x = -3/10 (*)

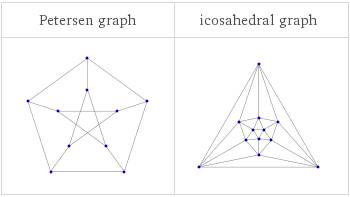
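That optimization answer can also be confirmed by hand. For a > 0, the minimum of ax² + bx + c sits at the vertex x* = -b/(2a), where the derivative 2ax + b vanishes. Here is a minimal Python sketch that uses exact rational arithmetic (`fractions.Fraction`) so the result comes out as a fraction. The function name `quadratic_min` is hypothetical, and the closed-form vertex formula is just one way to solve this; nothing here claims to describe how Wolfram|Alpha does it.

```python
from fractions import Fraction

def quadratic_min(a, b, c):
    """Minimize a*x^2 + b*x + c (requires a > 0) via the vertex formula.

    Setting the derivative 2*a*x + b to zero gives x* = -b / (2a);
    substituting back gives the minimum value c - b^2 / (4a).
    """
    if a <= 0:
        raise ValueError("need a > 0 for a finite minimum")
    a, b, c = Fraction(a), Fraction(b), Fraction(c)
    x_star = -b / (2 * a)
    f_star = a * x_star**2 + b * x_star + c
    return x_star, f_star

x_star, f_star = quadratic_min(5, 3, 12)
print(f"min = {f_star} at x = {x_star}")  # min = 231/20 at x = -3/10
```

Working in `Fraction` rather than `float` is the design choice that matters: 231/20 and -3/10 come back exactly, in the same form as the Wolfram|Alpha answer, instead of as decimals that may carry rounding noise.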

And, in discrete mathematics, it does wonderful things like generate numbers (permutations, combinations, and much more) and even put out a few graphs:

(*)

(*)
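For a sense of what the permutation and combination features compute, here is a small sketch in Python 3.8+ (needed for `math.comb` and `math.perm`). It enumerates the objects with `itertools` and checks the counts against the closed-form formulas. The list `items` is just an illustrative input, and this says nothing about Wolfram|Alpha's own interface.

```python
from itertools import combinations, permutations
from math import comb, factorial, perm

items = ["a", "b", "c", "d"]  # illustrative input

# Enumerate the objects themselves...
all_perms = list(permutations(items))  # every ordering of all 4 items
pairs = list(combinations(items, 2))   # unordered 2-element subsets

# ...and check the counts against the closed-form formulas.
assert len(all_perms) == factorial(4) == perm(4) == 24
assert len(pairs) == comb(4, 2) == 6

print(pairs)
# [('a', 'b'), ('a', 'c'), ('a', 'd'), ('b', 'c'), ('b', 'd'), ('c', 'd')]
```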

This is all great stuff.

And it is all owned by Wolfram, which defines how you can use it. As Groklaw points out, the Wolfram Terms of Service are pretty clear:

> If you make results from Wolfram|Alpha available to anyone else, or incorporate those results into your own documents or presentations, you must include attribution indicating that the results and/or the presentation of the results came from Wolfram|Alpha. Some Wolfram|Alpha results include copyright statements or attributions linking the results to us or to third-party data providers, and you may not remove or obscure those attributions or copyright statements. Whenever possible, such attribution should take the form of a link to Wolfram|Alpha, either to the front page of the website or, better yet, to the specific query that generated the results you used. (This is also the most useful form of attribution for your readers, and they will appreciate your using links whenever possible.)

And if you are not an academic or a not-for-profit, don’t think of using Wolfram|Alpha as a calculator to check your addition (“Hmmm… is 23+47 really equal to 70? Let me check with Wolfram|Alpha before I put this in my report”), at least not without some extra paperwork:

> If you want to use copyrighted results returned by Wolfram|Alpha in a commercial or for-profit publication we will usually be happy to grant you a low- or no-cost license to do so.

“Why yes it is. I better get filling out that license request! No wait, maybe addition isn’t a ‘copyrighted result’. Maybe I better run this by legal.”

Groklaw has an interesting comparison to Google:

> Google, in contrast, has no Terms of Use on its main page. You have to dig to find it at all, but here it is, and basically it says you agree you won’t violate any laws. You don’t have to credit Google for your search results. Again, this isn’t a criticism of Wolfram|Alpha, as they have every right to do whatever they wish. I’m highlighting it, though, because I just wouldn’t have expected to have to provide attribution, being so used to Google. And I’m highlighting it, because you probably don’t all read Terms of Use.

So if you use Wolfram|Alpha, be prepared to pepper your work with citations (I have done so, though the link on the Wolfram page says that the suggested citation style is “coming soon”: I hope I did it right and they do not get all lawyered up) and perhaps be prepared to fill out some licensing forms. And it might be a good idea to read some of those “Terms of Service”.

——————————————–

(*) Results Computed by Wolfram Mathematica.

Christos Papadimitriou of UC Berkeley was the

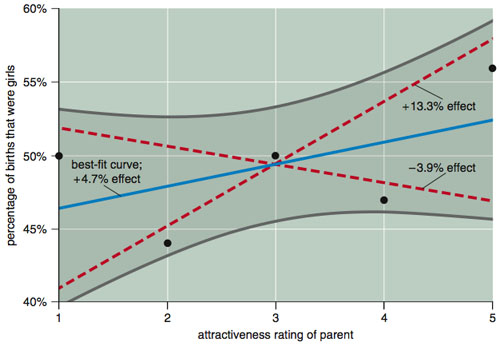

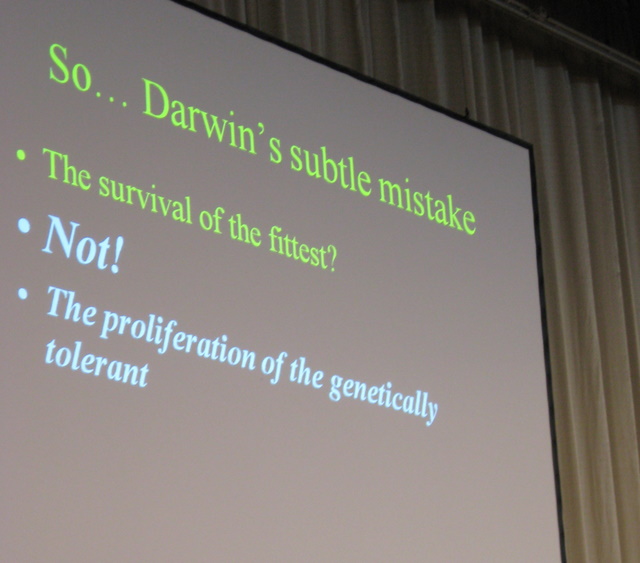

Christos’ fundamental point is that perhaps selection under recombination does not maximize fitness. Instead, it favors “mixability” (or genetic tolerance). And this mixability accelerates speciation, and accelerates evolution. There is a paper in the Proceedings of the National Academy of Sciences that explains this all in more detail.