Montreal, Canada

Happy Birthday to the Blog

This blog is now two years old. One year ago, I wrote

I’ve had 92 posts (93 counting this one) in that year, which seems like a reasonable number.I’ve had about 8000 visitors to the blog, which seems pretty good. Not up with the big boys (sites like BoingBoing) with millions of visitors, but ahead of lots of sites. The visitors have provided some commentary activity (69 comments, about half from me). I only have eight other blogs linking to me (ranking the blog 316,042 at Technorati) so I need to get the blogrolling thing going better.

It is amazing the amount of spam comments that are generated. My system has protection against such comments (Akismet) and it has caught 2,205 (!) spam comments.

My posting frequency is down a little bit with 83 posts (I’d like to get it up to a consistent twice per week) ; comments are up to 123, again half from me; 20 blogs point to me putting my Technorati ranking down to 415,686. Average visitors per month has gone from 1000 to 2000. The big increase: I received 38,000 (!) spam comments. Unbelievable!

The best thing: we are starting to see more blogs on OR. So check out the blogroll and spread the word!

Student finds small Turing machine

I wrote previously about a competition Stephen Wolfram (of Mathematica fame) had to show that a particularly small Turing Machine (the “2,3 Turing Machine”) is universal. Sure enough it is, as shown by a University of Birmingham (UK) student, Alex Smith, as reported in Nature. This machine, as shown in a diagram from Wolfram’s blog on the prize has just 2 states and 3 colors. It is certainly surprising that such a simple structure can compute anything any computer can!

I wrote previously about a competition Stephen Wolfram (of Mathematica fame) had to show that a particularly small Turing Machine (the “2,3 Turing Machine”) is universal. Sure enough it is, as shown by a University of Birmingham (UK) student, Alex Smith, as reported in Nature. This machine, as shown in a diagram from Wolfram’s blog on the prize has just 2 states and 3 colors. It is certainly surprising that such a simple structure can compute anything any computer can!

I had hoped that operations research might be of help in this, but that does not seem to be the case.

Smith learned about Wolfram’s challenge in an Internet chat room and almost immediately went to work fiddling with the machine. After learning its behaviour, he set about proving that it was computationally equivalent to another type of simple, conceptual computer known as a tag system.

Mathematicians have already shown that tag systems can compute any problem, so proving the two were equivalent effectively proved the power of Wolfram’s machine. Smith’s proof is 44 pages long.

My (what is the word for “person who makes me jealous”? Hmmm… I’ll make up a word: “Jealous Idol”) jidol, Scott Aaronson managed to tear himself away from supermodels to provide a quote (albeit of a “raining on a parade” type):

The solution isn’t hugely relevant to modern computer science, says Scott Aaronson, a computer scientist at the Massachusetts Institute of Technology (MIT) in Cambridge, Massachusetts. “Most theoretical computer scientists don’t particularly care about finding the smallest universal Turing machines,” he wrote in an e-mail. “They see it as a recreational pursuit that interested people in the 60s and 70s but is now sort of ‘retro’.”

Thanks to Kathryn Cramer for pointing out the result of this competition. She also has a very nice posting in her blog on visualizing the effect of the Federal Reserve rate cut, relevant to my previous posting on visualization.

Visual Display of Operations Research Data/Algorithms/Solutions

When I was a doctoral student (20 years ago!), my adviser John Bartholdi introduced me to Edward Tufte’s Visual Display of Quantitative Information, perhaps hoping that my dissertation would be a model of appealing, provocative display. I am afraid I disappointed him: just like every other doctoral student, my dissertation contained great vomits of tables containing every piece of data I had generated over the previous years. I still find the topic fascinating, and I still find Minard’s graph of the fortunes of Napoleon’s army in the Russian campaign of 1812 an inspiring sight.

When I was a doctoral student (20 years ago!), my adviser John Bartholdi introduced me to Edward Tufte’s Visual Display of Quantitative Information, perhaps hoping that my dissertation would be a model of appealing, provocative display. I am afraid I disappointed him: just like every other doctoral student, my dissertation contained great vomits of tables containing every piece of data I had generated over the previous years. I still find the topic fascinating, and I still find Minard’s graph of the fortunes of Napoleon’s army in the Russian campaign of 1812 an inspiring sight.

Most operations research has pretty terrible presentation. Partially this is because of the high-dimensions in which we work. Only rarely can we work in 2 or 3 dimensions and present comprehensible results. Once in a whle I see something inspiring. One recent work I saw was the EURO Gold Medal presentation of Aharon Ben-Tal. Part of the presentation involved showing how nonlinear optimization algorithms affected the design of a bridge span. The display of the span made things perfectly clear. And the visual display of moats for the traveling salesman problem is a very effective way of getting across the definition and validity of the lower bounds.

Taking some sites from outside of our field for inspiration, let me offer JunkCharts (thanks to Kaiser for providing a comment which led me to the site) and Strange Maps. JunkCharts looks at a variety of displays of numbers, offering critiques about misrepresentations and other biases. Strange Maps matches with my own interest in antique maps, but concentrates on unusual maps, often representing some other data in its geographic layout.

We could use more such creativity in operations research: have you seen something particularly effective?

Better Models or Better Data

In Greg Mankiw’s economics blog, he excerpts from a review of Alan Greenspan’s new book:

Michael Kinsley reviews Alan Greenspan’s book (which I have not yet read). This passage from the review caught my eye:

You gotta love a guy whose idea of an important life lesson is: “I have always argued that an up-to-date set of the most detailed estimates for the latest available quarter is far more useful for forecasting accuracy than a more sophisticated model structure.” Words to live by.

Mike thinks Alan is being hopelessly geeky here. But I think Alan is talking to geeks like me, and also those who used to work for him on the staff of the Federal Reserve.

…

Better monetary policy, he suggests, is more likely to follow from better data than from better models. Relatively little modern macro has been directed at improving data sources. Perhaps that is a mistake.

This issue of data versus model is important in operations research. I must say that in my own consulting, I am rather cavalier about data quality. I was on a project with the United States Postal Service where it was surprising how iffy much of the data was (for instance, we had to estimate (not measure) the amount of mail that was sent between LA and NY each year). My view was that the qualitative conclusions were robust to reasonable variations in the data. So if the model suggested that the number of mail processing facilities could be cut by 25%, this was true no matter what the exact data was. Further, the solutions we generated were going to be pretty good, no matter what the true data was: the day-to-day operation could handle any mis-estimates that were in our approach.

I was not persuasive in this: a tremendous amount of time and effort was spent in getting better data. At the end, this was wise, since the results stand up much better to detailed scrutiny. No group faced with the closure of a facility wants to hear: it really doesn’t matter what the data is, since Trick says so.

I do wonder how many OR projects actually end up with poor or wrong answers due to not getting data of high enough quality. But it is a heck of a lot more fun to develop models rather than hunt down missing data.

Playing “Pass the Kidney”

Kidneys are unusual organs. We are given two, though we can get by with one. So, unlike the heart, say, we each have a “spare” that we can donate to others. There are some altruistic sorts who will donate a kidney to a stranger, but, for most of us, we need a really good reason before we undergo the surgery to remove a kidney. That reason is typically the need of a loved one for a replacement kidney. But what to do if the transplant won’t work due to blood type or other medical incompatibilities? Well, if my kidney won’t work for my brother, then perhaps it will work for stranger A, who has a sister whose kidney does work for my brother. We can then swap: I give a kidney to A, whose sister gives a kidney to my brother. Such is the logic behind kidney swapping. The Wall Street Journal of October 15, 2007 has an article on these swaps (thanks to Tallys at the University of Miami for pointing this out to me!). Clearly they are important aspect of kidney transplants:

Some experts estimate that eventually there could be as many as 4,000 kidney exchanges a year. That would be a big addition to the 6,400 kidney transplants performed last year involving living donors. An additional 10,600 transplants involved kidneys from deceased donors. Waits for organs from deceased donors can run to five years or more.

But there is an operations research question:

As interest in kidney swaps grows, logistical, medical and ethical questions are emerging. One of the fundamental issues: Who should get first dibs on a match? A donor with blood type O, for instance, can give to patients of any blood type and might match with hundreds of pairs. One combination might allow for two transplants; another, for 10, because it allows other matches to occur, in a kind of domino effect.

This is an interesting problem, though even more interesting when one considers the objective. Do you aim for cycles of length 10, since it allows for lots of transplants? Or do you go with shorter cycles, since they are less likely to be complicated by individual issues? In fact, the rest of the article is about a particular kidney patient and her experiences in trying to arrange for a swap. There were all sorts of issues related to the quality of the kidney she was to receive, the geographic location of the donors, the health of other recipients and so on. The group she ended up with had 3 donors and 3 recipients: a longer cycle would have increased these issues.

A key issue in this area is the population used to find the paths. The network’s currently are ad-hoc, based, it appears, on individual surgeons and hospitals.

Complicating the picture: transplant surgeons, some of whom are highly competitive and focused on their own programs rather than joining networks with other hospitals. “When big egos come together, sparks fly,” says Bill Bry, surgical director of kidney transplantation at California Pacific Medical Center in San Francisco. An Ohio paired-donation network recently split into two when surgeons quarreled.

I suspect that surgeons don’t truly understand that cutting a population in half doesn’t merely cut the probability of a cycle in half. The probability of pairwise exchanges (two donor/recipient pairs) goes down to one-quarter: I am sure some combinatorialist knows the effect on the probability of longer cycles, but I suspect it decreases further.

The term operations research is not mentioned, but the techniques clearly are OR methods. In fact, I get a shout-out, though not by name

In the early kidney swaps, surgeons matched pairs using a pencil and paper or by moving magnetic pieces around on a board. Today, computer experts, working with economists, are developing programs to “optimize” matching, to enable the greatest number of transplants. They’re employing mathematical techniques used for major-league baseball schedules, airline departures and online driving directions.

Perhaps we should rename the field of operations research (OR) to be “the techniques that create baseball schedules” (TCBS). But I think helping facilitate kidney transplants is a little more important than making sure the Yankees get enough summer weekends at home.

UPDATE (Oct 17). Pascal van Hentenryck has alerted me to a CMU connection to this story:

PITTSBURGH—Computer scientists at Carnegie Mellon University have developed a new computerized method for matching living kidney donors with kidney disease patients that can increase the number of kidney transplants—and save lives.

This step-by-step method, or algorithm, could significantly boost the efficiency of kidney exchanges, a mechanism for matching live donors with unrelated recipients. Kidney exchanges are now considered the best chance for boosting the number of kidney transplants in the United States. More than 70,000 Americans are on the waiting list for kidney transplants and about 4,000 die waiting each year.

The matching algorithm makes it possible to create matches for three- and four-way exchanges—that is, three or four donors matched to three or four recipients—as well as two-way exchanges. It is the first that is scalable so it can be used for a national pool of donors and recipients, said Tuomas Sandholm, professor of computer science.

The matching algorithm makes it possible to create matches for three- and four-way exchanges—that is, three or four donors matched to three or four recipients—as well as two-way exchanges. It is the first that is scalable so it can be used for a national pool of donors and recipients, said Tuomas Sandholm, professor of computer science.

A paper detailing the algorithm, developed by Sandholm, Computer Science Professor Avrim Blum and graduate assistant David J. Abraham, will be presented Friday at the Association for Computing Machinery’s Conference on Electronic Commerce in San Diego.

Sports Scheduling and Rubik’s Cube

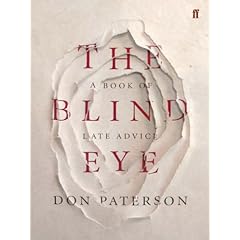

The October 6, 2007 edition of The Spectator (an English weekly with a right-of-center, Olde England bias but wonderful cartoons, chess column, and commentary once the bias is accounted for) contains a “review” of a book The Blind Eye: A Book of Late Advice by the poet Don Paterson (I don’t think the book is available in the US). The review is actually just a collection of 30 or so extracts from the book. The review alone left me chuckling all morning. Some quotes:

The October 6, 2007 edition of The Spectator (an English weekly with a right-of-center, Olde England bias but wonderful cartoons, chess column, and commentary once the bias is accounted for) contains a “review” of a book The Blind Eye: A Book of Late Advice by the poet Don Paterson (I don’t think the book is available in the US). The review is actually just a collection of 30 or so extracts from the book. The review alone left me chuckling all morning. Some quotes:

I can see exactly what not to do at the moment. No doubt through the usual process of elimination, I’ll arrive at my favourite strategy of total paralysis.

I enjoyed L.’s creeping senility. I could have him repeat my favorite stories as often as I wanted, sometimes several times in the space of the same afternoon. X’s sudden lurch into his anecdotage, on the other hand, was a disaster: until then, his shyness had prevented our discovering what a bore he was.

and

You’ve made a blog … clever boy! Next: flushing.

One really hit home for me:

A poem with one line wrong is like a Rubik’s Cube with one square wrong: what it is precisely not is one move away from completion.

I have been looking for an intuitive explanation to various sports leagues on why a schedule that is perfect except for one flaw is likely a long way from a perfect schedule. I will proceed to rip off this analogy and pretend it is my own. I admit that comparing a schedule to Rubik’s cube is not as clever as comparing a poem to the cube: I wonder if I should compare a schedule to a poem?

My Jealousy Knows No Bounds (Quantum or Otherwise)

I’ll say it straight out: I am really jealous of Scott Aaronson, author of the Shtetl-Optimized blog. First, he has an audience that provides hundreds of comments to his blog entries and visibility sufficient to be picked up by MIT Technology Review, albeit with mixed results. Second, he writes at length in an interesting and provocative way. For me, it took four times before I could even spell provocative. Third, he has broad interests and insights that belie his age (he received his doctorate in 2004 and I suspect did so before the normal time). Fourth, … well, I’ll stop there, since it is only depressing me further.

Except now he has gone and topped everything! He is being plagiarized by Australian fashion models! I don’t think I have every written anything that would sound anything but goofy coming from the lips of a fashion model, but there he is providing dialog that actually sounds kinda … smart. With his luck, he’ll sue for millions, win and get the chance to date the models.

Death of Lloyd Clarke

Lloyd Clarke of ILOG, who most of us in the linear/integer programming community knew, was killed last week in a bicycling accident. From the ILOG announcement:

Lloyd Clarke of ILOG, who most of us in the linear/integer programming community knew, was killed last week in a bicycling accident. From the ILOG announcement:

Lloyd was an active member of the INFORMS community, serving on the advisory council for several INFORMS Practice meetings, and as the industry liaison and practitioner program chair for several INFORMS general meetings. He was known among his friends and colleagues for his kindness, his devotion to both work and family, his sense of humor, his seemingly boundless enthusiasm for whatever task he undertook, and his infectious sense of optimism. In addition to enjoying photography, Lloyd was an avid bicyclist, typically biking more than 150 miles per week. The one small comfort we can take is that he died doing something he loved.

ILOG has put together a fund in his memory. His local paper has a short article on Lloyd and the accident.

I have a son a little younger than Lloyd’s daughter: stories like this remind me to give him a hug every day before I leave.

Update November 10. Be sure to read the comments for a response to the news reports about the fault of the accident.

Getting cheaper boats and ships

I am in Providence attending this year’s Constraint Programming conference. I’m on the executive and we have developed new bylaws, so instead of listening to all the talks, I am involved in discussions on the minutia of quorums and transition rules. Still, there is some time for the conference itself.

I just saw a terrific talk by Matt Ginsberg of the University of Oregon and On Time Systems, a firm specializing in scheduling optimization. One of the big things they do is shipyard optimization, and he described putting together a system for better scheduling the production of navy submarines (which are boats) and carriers (which are ships). While traditional scheduling involves trying to minimize the amount of time it takes to build something, this sort of scheduling is about minimizing costs (or so he thought). So his firm put together a nice system to minimize the cost of going through the tens of thousands of tasks that go into a boat or ship. If that was the end of the talk, it would have been a fine technical talk on an interesting topic. But he went further.

I just saw a terrific talk by Matt Ginsberg of the University of Oregon and On Time Systems, a firm specializing in scheduling optimization. One of the big things they do is shipyard optimization, and he described putting together a system for better scheduling the production of navy submarines (which are boats) and carriers (which are ships). While traditional scheduling involves trying to minimize the amount of time it takes to build something, this sort of scheduling is about minimizing costs (or so he thought). So his firm put together a nice system to minimize the cost of going through the tens of thousands of tasks that go into a boat or ship. If that was the end of the talk, it would have been a fine technical talk on an interesting topic. But he went further.

“Just because a schedule looks good doesn’t mean it is good” is a phrase I wish more people would use. Lots of schedules look good, but when you try to use them they fail for easy-to-see-in-retrospect reasons. One example of this is the highly optimized crew schedules used by airlines. Sure, they look good and they appear to save money, but they are fragile: disruptions can spread throughout the system. Matt brought up two important issues not covered by the “minimize cost” aspects of their schedules. The first is the brittleness seen in airline crew schedules: will delays in one task mess up more? The second is union buy-in. If the goal is to decrease costs, won’t unions naturally be against it?

The brittleness point is important. Indeed, a minimum cost schedule is brittle (though perhaps no more brittle than the schedule currently in place), though it saves about 10.4% on costs. But the methods Matt used can be adapted to make float (the amount any task can be delayed without affecting other tasks) into account. A task with too little float (perhaps just a few work shifts) is at risk of delaying the whole project. But you can combine these objectives: minimize cost and minimize number of tight tasks. It turns out that you can decrease the number of tight tasks by more than 90% (relative to the current schedule) and still save about 10.3% on costs (numbers approximate: I have to learn to take better notes!). So decreasing brittleness comes almost for free!

As for the unions, one fact about shipyards is that they often layoff and then rehire lots and lots of workers. The schedules Matt provides are much smoother in the way they use workers. Certainly far more workers would have consistent jobs under the schedules, and layoffs would not occur near as often.

Overall, the shipbuilders would save money, the unionized workers would have more consistent jobs, and the building process would be less susceptible to delays. What’s not to like?

Well, the firms building the ships are paid by the Navy on a cost-plus basis. The higher the cost, the higher the plus. So they have essentially no interest in saving our money. They like high costs! Next time you see your congressman or -woman, ask “What happened to the ARGOS project to improve ship-building operations? Wasn’t that supposed to save us a few billion dollars?”

While we wait for the companies to work more efficiently, On Time Systems has a scheduling game you can play.