Sadly, I’m not at INFORMS Practice, but the blog entries and tweets make me feel like I am there (or perhaps they remind me I am not). Lots of interesting things happening and the conference hasn’t even started yet! Coming up shortly: the technology workshops, followed by the Welcome Reception tonight. I’ve got the tweets in my sidebar, and highly recommend following the conference page for the blog entries.

Category: Conferences

Nurse, Nurse! Where’s my Nurse?

I am a sucker for competitions. The people at PATAT (a conference series Practice and Theory of Automated Timetabling; I was co-chair for their 2004 conference, and a member of their steering committee for a few years) do a really good job at timetabling competitions. I really liked their competition for the 2008 conference on course and exam timetabling. I had some thoughts of using logical Benders’ to solve one of the problems but didn’t quite get my act together for it. But I liked the problems and I liked the organization.

The next competition has just begin, though it is on an accelerated schedule. The topic is nurse rostering and ends June 1, 2010. This is an important practical problem:

Building good rosters for nurses in hospitals has received ample attention in recent years and is recognised as a difficult combinatorial optimisation problem with practical relevance. In hospitals much effort is spent producing solutions which are workable and of a high quality.

It is a nicely designed competition, but be aware that you can’t use commercial codes. So those of us who use CPLEX/XPRESS/Gurobi as a linear or integer programming base are probably out of luck:

Rule 5

Participants have to implement an algorithm to tackle the problem on a single processor machine; they can use any programming language. The use of third-party software is allowed under the following restrictions:

- it is free software

- it’s behaviour is (reasonably) documented

- it runs under a commonly-used operating system (Linux or Windows)

Working with COIN-OR is probably legal, under a broad definition of “reasonably documented”. But it is interesting that Mac operating systems are not deemed “commonly-used”.

Despite these caveats, I do recommend the competition, and perhaps will see you in Belfast!

INFORMS Practice Tutorials

The INFORMS Practice meeting coming up in Orlando has an extremely impressive set of methodology tutorials planned. Here is the list:

360i

M. Kevin Geraghty, MS, Vice President, Research & Analytics, on “Marketing in Online Social Spaces.”Business Forecast Systems, Inc.

Eric A. Stellwagen, BA, CEO & Co-Founder, on “Beyond Time Series Forecasting: Improving Forecasting Accuracy by Modeling the Impact of Promotions, Business Interruptions and Other Aperiodic Events.”Chevron Corporation

Franklyn G. Koch, MS, Decision Analysis Practice Leader, Chevron Projects Resources Company, on “How Game Theory Yields New Insights to Decision Analysis in the Energy Industry.”Federal Energy Regulatory Commission

Richard O’Neill, PhD, Chief Economic Advisor, on “Better, Smarter Electricity Markets: How Better Optimization Software Can Save Billions.”Forio Business Simulations

Michael Bean, MS, President, on “How to Create Web-Based Simulations and Interactive Data Visualizations.”.Georgia Institute of Technology

Ellis L. Johnson, PhD, Professor & Coca-Cola Chair; Industrial & Systems Engineering, on “A Framework for Choosing Optimization Software.”Hewlett-Packard Corporation

Pramod Singh, PhD, Analytics Solution Architect, on “Marketing Campaign Optimization Is Hard (Especially in the Future).”IBM Research

Robin Lougee-Heimer, PhD, Research Staff Member; Program Manager, COIN-O.R., on “Using Open-Source Solvers in Prime-Time Applications.”Innovative Decisions, Inc.

Donald L. Buckshaw, MS, Senior Principal Analyst, on “The Balance Beam Process for Prioritization and Weight Elicitation.”Intechné

Sanjay Saigal, PhD, President, on “Fueled by Randomness: Practical Probability Management.”Intel Corporation

Erik F. Hertzler, MBA, Capital Supply Chain Staff Engineer, TME Capital Planning Group, on “Using Option Contracts with Suppliers to Reduce Risk and Build Win-Win Relationships.”SAS Institute Inc.

Michael Gilliland, MS, MSE, Product Marketing Manager, Forecasting, on “Why are Forecasts So Wrong? What Management Must Know About Forecasting.”Schneider National, Inc. & Princeton University

Ted L. Gifford, MS, Distinguished Member of Technical Staff and Hugo Simao, PhD, Senior Operations Research Engineer, on “Approximate Dynamic Programming Captures Fleet Operations for Schneider National.”University of Cincinnati, College of Business

Jeffrey D. Camm, PhD, Professor and Head; Quantitative Analysis & Operations Management, on “The Art of Modeling.”Xerium Technologies, Inc. & Vanguard Software Corporation

David Bryant, Vice President, Operations and Brian Lewis, PhD, Vice President, Professional Services, on “Global Optimization for Complex Production Scheduling Decisions.”Veritec Solutions

Warren H. Lieberman, PhD, President, on “Effective Pricing.”

A few points: it is surprising how many tutorials are being given by non-academics: it will be fantastic to get a real-world view on these issues. Second, I am very impressed with the range of topics being discussed. Third, I would really like to see about 2/3 of these, but know that I will only have time for 2 or 3 (since Monday is fully scheduled for me for the Edelman competition). This is going to be frustrating! I think I will volunteer to do the conference scheduling in order to maximize the number of tutorials I can see.

If you are interested in this conference, note that the super saver registration deadline is March 1.

What Panels would you Like to See?

The organizers at this Fall’s INFORMS Meeting (theme of the conference: “Willie, Lance, and Optimizing the Music Scene in Austin”) have asked me to organize a series of panel discussions (or other “not four papers, each of 22.5 minutes” form) on topics of interest. These panels should not be on technical topics but rather on issues of professional interest. What would make for a good panel? Here are a few possibilties:

- Blogging, Twitter, and Facebook: Role for Operations Researchers (of course!)

- Editors Panel: How to be a successful author, referee, and editor

- Funding Agencies: How and why to get funding

- The Academic/Industry Interface: How Industry can Support Academia and vice versa

- Role of Operations Research in Business Schools

- Role of Operations Research in Undergraduate Education

- Department Heads Panel: The Future of Industrial Engineering Departments

- Dean’s Panel: Operations Research as a Path to Academic Leadership

What would you like to see? Do any of the above particularly resonate? What would you add? Other than a panel discussion (or four 22.5 minute talks, and please hold all questions until the next conference), what would be an interesting format to present some of this?

If you have some suggestions of possible panel organizers or members, please feel free to email me those personally.

Algorithmic Voting Theory, Venice, and a Talk on Old/New Papers

I just got back from Venice, where I attended a conference on Algorithmic Decision Theory. This is a new conference series (optimistically numbered the first conference, implying at least a second) revolving around issues in uncertainty in decision making, preference solicitation, learning and other issues. From the conference web page:

A new unique event aiming to put together researchers and practitioners coming from different fields such as Decision Theory, Discrete Mathematics, Theoretical Computer Science and Artificial Intelligence in order to improve decision support in the presence of massive data bases, combinatorial structures, partial and/or uncertain information and distributed, possibly inter-operating decision makers. Such problems arise in several real-world decision making problems such as humanitarian logistics, epidemiology, risk assessment and management, e-government, electronic commerce, and the implementation of recommender systems.

This area has been very active, particularly in computer science where there are a host of applications.

I was asked to give one of the invited talks, and spoke on “An Operations Research Look at Voting”. I was a little worried about giving this talk, since my main qualifications come from papers published twenty years ago. When I was a doctoral student at Georgia Tech, I worked with John Bartholdi and Craig Tovey on computational issues in voting. Being the first to look at those issues, we got to prove some of the basic results in the area. These include

- For some natural voting rules, it is NP-hard to determine who the winner is.

- For some natural voting rules, it is NP-hard to determine how to manipulate the rule (where manipulation means misrepresenting your preferences so as to get a preferred outcome).

- For some natural voting rules, optimally using the powers of a chair to form subcommittees or otherwise manipulate the voting process is NP-hard.

We published this work in Social Choice and Welfare (after getting it rejected from more mainstream economics journals) where … it was soundly ignored for 15 years. No one referred to the papers; no one followed up on the papers; no one cared about the papers at all!

This work was my job talk in 1987/88, and it got me a wonderful job here at the Tepper School (then GSIA), Carnegie Mellon. And, without Google Scholar, it was not obvious that the papers were being ignored, so they added to my vita, and made it a bit easier to pass through the various steps.

But I did like the work a lot, and regretted (and still regret) that my economist colleagues did not take computational limits more seriously in their models.

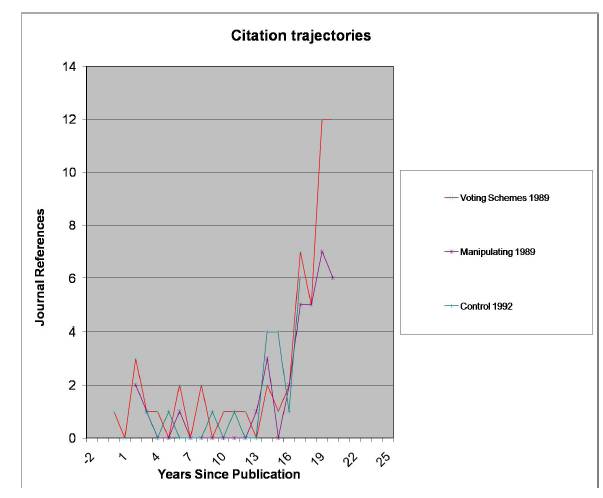

But then something amazing happened about 5 years ago: people started referring to the papers! The references were mainly in computer science, but at least the papers were being recognized. The counts of these papers in the Web of Science (formerly Science Citation Index) are particularly striking. In the associated graph, the x-axis is years since publication; the y-axis is the number of references in Web of Science in that year (Google scholar numbers are higher of course, but there is a bias towards more recent papers there). In my talk, I compare that graph to my “normal” papers, which reach a peak after 4 or 5 years then decrease. It is really gratifying to see the interest in these papers along with all the really cool new results people are getting.

But then something amazing happened about 5 years ago: people started referring to the papers! The references were mainly in computer science, but at least the papers were being recognized. The counts of these papers in the Web of Science (formerly Science Citation Index) are particularly striking. In the associated graph, the x-axis is years since publication; the y-axis is the number of references in Web of Science in that year (Google scholar numbers are higher of course, but there is a bias towards more recent papers there). In my talk, I compare that graph to my “normal” papers, which reach a peak after 4 or 5 years then decrease. It is really gratifying to see the interest in these papers along with all the really cool new results people are getting.

I closed off the talk with some work I have done recently on creating voting trees. Suppose there are three candidates, “a”, “b”, and “c”, and you really like candidate “a”. Many voting systems proceed as a series of comparisons between two alternatives (think of standard parliamentary procedure). If you are the chairperson, you might try to bring the candidates forward so as to increase the chances of “a” winning. In fact, if you set the agenda to be “b” against “c” and the winner against “a”, then “a” will win as long as “a” beats someone (and no one beats everyone). In this problem, the goal is to do the manipulation without knowing other voters’ preferences.

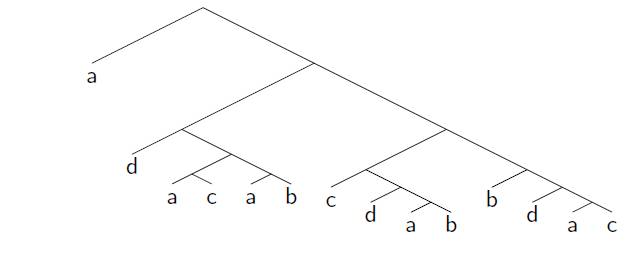

Can you do this for four candidates? If you want “a” to win, “a” must be in the top cycle: the group of candidates (perhaps the entire set of candidates) who all beat all the other candidates. The “Condorcet winner” is the minimal top cycle: if some candidate beats all the other candidates one-on-one, then that candidate must win any voting tree it occurs in. So, assuming “a” is in the top cycle, can you create a voting tree so that “a” wins with four candidates? The answer is yes, but it is a bit complicated: first “a” goes against “c” with the winner against “d” then the winner against “b” who plays the winner of (“a” goes against “b” with the winner against “d” …) …. actually, the minimum tree has 14 leaves! I am biased, but I think the tree is beautiful, but it goes to show how hard it is to manipulate agendas without knowledge of others’ preferences. I am in the process of generating the trees on 4 candidates: there is a very natural rule (“Copeland winner with Copeland loser tie break”: see the presentation for definitions) that requires more than 32 leaves (if an implementation tree for it exists).

Can you do this for four candidates? If you want “a” to win, “a” must be in the top cycle: the group of candidates (perhaps the entire set of candidates) who all beat all the other candidates. The “Condorcet winner” is the minimal top cycle: if some candidate beats all the other candidates one-on-one, then that candidate must win any voting tree it occurs in. So, assuming “a” is in the top cycle, can you create a voting tree so that “a” wins with four candidates? The answer is yes, but it is a bit complicated: first “a” goes against “c” with the winner against “d” then the winner against “b” who plays the winner of (“a” goes against “b” with the winner against “d” …) …. actually, the minimum tree has 14 leaves! I am biased, but I think the tree is beautiful, but it goes to show how hard it is to manipulate agendas without knowledge of others’ preferences. I am in the process of generating the trees on 4 candidates: there is a very natural rule (“Copeland winner with Copeland loser tie break”: see the presentation for definitions) that requires more than 32 leaves (if an implementation tree for it exists).

Sanjay Srivastava and I made a conjecture almost 15 years ago that would imply that this sort of manipulation would be possible no matter how many candidates. Little progress has been made but I think it is still a great problem (the economists know this as implementation by backwards induction and characterizing rules implementable on trees is an important problem in social choice/mechanism design).

If you want more details on all this, here are my slides. The references are

- Small Binary Voting Trees M.A. Trick, Small Binary Voting Trees, First International Workshop on Computational Social Choice,

Amsterdam, Netherlands, 500-511 (2006). - Sophisticated Voting Rules S. Srivastava and M.A. Trick, Sophisticated voting rules: The two tournament case, Social Choice and

Welfare, 13: 275-289 (1996).

- How hard is it to control an election?, with C. A. Tovey and M. A. Trick. A slightly revised version of this appeared in Mathl. Comput. Modelling (Special Issue on Formal Theories of Politics) 16(8/9):27-40 (1992).

- The computational difficulty of manipulating an election, J.J. Bartholdi, C. A. Tovey and M. A. Trick (1989); Social Choice and Welfare 6:227-241 (1989).

- Voting schemes for which it can be difficult to tell who won the election, J.J. Bartholdi, C. A. Tovey and M. A. Trick; Social Choice and Welfare 6:157-165 (1989).

Oh, and Venice is a beautiful place to visit. But you might have heard that elsewhere.

Modeling as a Teachable Skill

New post on the INFORMS Blog on a panel discussion I attended on how to teach modeling:

I just attended a nice “panel discussion” on Teaching the Art of Modeling, put together by Jim Orlin (MIT), Stephen Powell and Rob Shumsky (both from Dartmouth). This was not your normal INFORMS session! The panelists decided to do this as an “active learning” session, so audience members had to work throughout the session. The first exercise was to think about how to model a hypothetical, but real-sounding problem: “Suppose the Red Cross was considering paying people for their blood donations. How would you provide them with a model that could help them understand the tradeoffs.” That (paraphrased) was all the information we got. We were then given 10 minutes or so to work individually on addressing this. The idea would be that this would be the first 10 minutes of whatever multiple-hour process we would go through to get a “real” model. Where would you start?

For many, the starting point was brainstorming: putting down a whole set of issues to be considered and items that might go into the model. For others, it was graphing some of the anticipated relationships between key issues. Others still used techniques such as influence diagrams to help organize their thoughts. Be a hard-core mathematical programming, I thought in terms of objectives, variables and constraints, and was pretty far along with my nonlinear, nonconvex mixed integer program when time was called.

Stephen Powell then asked some audience members what they did, eliciting the strategies given above. He has experimented with this problem with students and learned a number of things about what they do (presumably either inexperienced or novice at modeling). First, even for students who have taken modeling courses, it is surprising how little of what we teach gets used in this context. Students, when faced with a fuzzy modeling problem, often do some combination of the following:

- They grab on to data like a lifeboat, prompty multiplying or dividing every number in sight in the hope of getting the “right answer” the professor is looking for. The Red Cross example has no numbers, so they might make some up just to get going.

- They dispense with modeling and go straight to the answer: “This is a bad idea because …”

- They adopt inefficient methods and are unable to step back and recognize how inefficient they have become.

- They overuse brainstorming relative to any aspect of structured problem solving that they might have been taught.

If there is a column of numbers, you can bet that many students will immediately run a regression!

After discussing these results (there are a couple papers in the Journal of the Operational Research Society on “How Novices Formulate Models” that covers this), Jim and Rob were given a problem new to them (on a model for deciding on the best morgtage to get) and they showed how an influence diagram approach would be used to begin understanding and modeling.

Powell and his co-author Robert Batt have a book entitled Modeling for Insight (Wiley: one of the exhibitors here) .

It was great to see a session that required the audience to do some work! While I was not completely convinced by the modeling approach presented (give me my objective, variables, and constraints!), I was convinced about active learning as a way to make 90 minutes go by much faster and in a much more effective way.

Moving on to San Diego (both my blog and I)

I’ll be guest blogging at the INFORMS Conference in San Diego, so I’ll be posting over there for the next few days. There are 12 guest bloggers, so the conference should get some pretty good coverage. I’ve got a news feed on my main page sidebar trying to track the blog, twitter feed, hash tags, and so on.

I’ll be guest blogging at the INFORMS Conference in San Diego, so I’ll be posting over there for the next few days. There are 12 guest bloggers, so the conference should get some pretty good coverage. I’ve got a news feed on my main page sidebar trying to track the blog, twitter feed, hash tags, and so on.

I have put in my first post on the extra preconference steps needed on the social network side:

When I started attending INFORMS (actually ORSA/TIMS) meetings in the 80’s, the preconference steps were clear: pack, find the (paper) airline tickets, print out my talk onto transparencies, go to conference, wander around wondering what was going on. There are few extra steps now:

- Follow @informs09 on twitter

- Check out the #informs09 tag on twitter, and remember to use it on own posts.

- Sign up for the daily e-news to stay up to date on conference news and activities.

- Subscribe to the INFORMS 09 blog (or remember to check back a lot!) to follow the official news and the twelve (!) guest bloggers.

- Join the Conference Linkedin Group (122 members and counting).

- Pack

- Find memory stick with talk

- Print out boarding passes

- Go to sunny San Diego

The extra steps are worth it. No more wandering around wondering what is happening! See you soon!

Not at ISMP

The International Symposium on Mathematical Programming of the Mathematical Programming Society occurs every three years, and I generally like to attend them. They are like INFORMS conferences in size, but have many more talks (and people!) that I want to see. This year’s ISMP is being held next week in Chicago. Unfortunately, I won’t be there: I will be in Cork Ireland attending an advisory board meeting of the Cork Constraint Computation Center.

If anyone is blogging from ISMP, please let me know so I can be sure to point to your entries.

Note added August 26. Check out the comments for pointers to Nathan Brixius and Tallys Yunes who are blogging the conference.

IFORS Distinguished Lecturer Christos Papadimitriou

Christos Papadimitriou of UC Berkeley was the IFORS Distinguished Lecturer at the EURO Meeting yesterday (in the fuzzy picture, he is getting his award from IFORS President Elise del Rosario), and gave a very fine lecture on “Computing Equilibria” (and Sex, though that was not in the formal title). The starting point for his lecture was to note that the internet has greatly increased interest in multi-player games. Christos described the Internet as the first computational artifact that was never truly designed: it was formed by the selfish behavior of millions of agents. To quote Scott Shenker:

Christos Papadimitriou of UC Berkeley was the IFORS Distinguished Lecturer at the EURO Meeting yesterday (in the fuzzy picture, he is getting his award from IFORS President Elise del Rosario), and gave a very fine lecture on “Computing Equilibria” (and Sex, though that was not in the formal title). The starting point for his lecture was to note that the internet has greatly increased interest in multi-player games. Christos described the Internet as the first computational artifact that was never truly designed: it was formed by the selfish behavior of millions of agents. To quote Scott Shenker:

The Internet is an equilibrium, we just need to identify the game

But how do players in a game find an equilibrium? For simple zero-sum games, linear programming can find an equilibrium. For non-zero sum games, John Nash in 1950 proved an equilibrium exists, but his proof is nonconstructive (and is essentially equivalent to Brouwer’s fixed point theorem).

This issue of finding equilibria often comes up in coffee conversations with my colleagues. Economists love the beauty of their theorems, but I get frustrated when they claim what they do has real-world relevance when their actors have super-real capabilities. As Christos quoted:

if your laptop can’t find it, then neither can the market

But, they complain, it is really a bunch of different actors, so perhaps the quote should be “if a million laptops can’t find it, then neither can the market”, but that doesn’t affect things very much. Given the lack of computational methods for finding equilibrium in any but the simplest games and markets, it seems reasonable to worry about the advisability of relying on the assumption that people in real markets can magically find equilibria. In fact, Christos has a theorem on price adjustment mechanisms that shows that sometimes these cannot clear markets in anything less than exponential time.

After the romp through computational methods for finding equlibria, Christos moved on to Sex (that should increase my google visibility!). Why do creatures have sex? What is the advantage? Biologists have looked at this problem but don’t have a really satisfactory solution to it. Christos was motivated by his experience with computational methods for optimization:

Why do Simulated Annealing algorithms work, while Genetic Algorithms don’t?

Audible gasps came from the sizable group of GA researchers in the audience. I’ll remind readers this is Christos’ view not mine (though I certainly believe that anyone doing work on GAs for the traveling salesman problem, as an example, who believes they are helping solve TSPs is misguided, but I have seen applications where GAs seem the right approach).

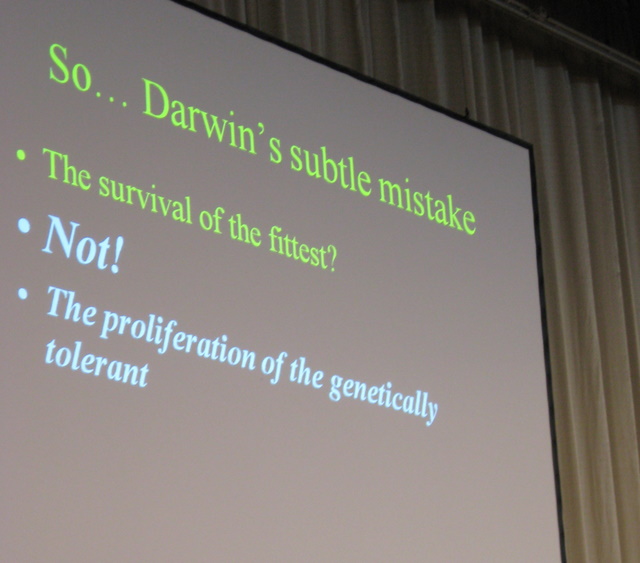

Christos’ fundamental point is that perhaps selection under recombination does not maximize fitness. Instead, it favors “mixability” (or genetic tolerance). And this mixability accelerates speciation, and accelerates evolution. There is a paper in the Proceedings of the National Academy of Science that explains this all in more detail.

Christos’ fundamental point is that perhaps selection under recombination does not maximize fitness. Instead, it favors “mixability” (or genetic tolerance). And this mixability accelerates speciation, and accelerates evolution. There is a paper in the Proceedings of the National Academy of Science that explains this all in more detail.

Christos’ home page has lots of the papers that underlie the talk (see, particularly, the “computing equilibria” and “biology” subsections). Highly recommended!

EURO Gold Medal

I am in Bonn, Germany for the EURO Conference. Tons of people here (2200+) but the organizers seem to be coping very well. Last night was a nice reception in a beer garden nearby. It has been a long time since I was at a conference with unlimited free beers. This morning was a little … slow.

The opening plenary session today included the announcement and talks of this year’s EURO Gold Medal winners: Jacques Benders, Eindhoven, and Frank Kelly, University of Cambridge. I missed most of the session (thanks, Graham Rand, for letting me know the winners), and I am really kicking myself for missing Benders’ talk: his work has a huge influence on my own current research. Benders’ Decomposition is one of the fundamental tools of operations research. Recently, people like John Hooker have breathed new life into the approach by introducing logical Benders’ decomposition (or combinatorial Benders decomposition).

Benders’ decomposition works as follows: take an optimization problem with two types of variables, x and y. First, solve the problem using only constraints that include just the x variables (this is the master problem). Give a solution x* to the master problem, now solve for the optimal y (the subproblem). We now have a candidate solution (x*,y*). The key step is to now generate a constraint on the x variables that says: “If you want a better x, it has to satisfy this constraint”. In classical Benders decomposition, that constraint is formed from the dual solution to the subproblem. In logical Benders, techniques such as constraint programming can be used to identify appropriate constraints. This iterates until the master problem (with the additional constraints) shows there is no better x than the ones already generated.

This approach can be radically faster than solving the overall (x,y) problem as one monolithic optimization. As the introduction to a reprinting of Benders’ original work says:

The real importance of the introduced algorithm is more evident today than ever before. It has had a profound seminal influence on the development of new generation of algorithms that enable us to solve ever larger and more complex from a wide spectrum.

I did get to the last half of Kelly’s talk. This was a very nice talk on modeling network routing, including issues of fairness. This happens a lot in internet routing of course, where routers try to figure out where things should go, and try to let everything through (eventually at least!). It would be rather annoying if one line through a router had a permanent “stop sign” while other traffic was sent through. Frank is famous enough to have a Wikipedia page.

I did get to the last half of Kelly’s talk. This was a very nice talk on modeling network routing, including issues of fairness. This happens a lot in internet routing of course, where routers try to figure out where things should go, and try to let everything through (eventually at least!). It would be rather annoying if one line through a router had a permanent “stop sign” while other traffic was sent through. Frank is famous enough to have a Wikipedia page.

Congrats to both Jacques and Frank, and no more missing plenary sessions for me!