I just got back from Venice, where I attended a conference on Algorithmic Decision Theory. This is a new conference series (optimistically numbered the first conference, implying at least a second) revolving around uncertainty in decision making, preference solicitation, learning and related issues. From the conference web page:

A new unique event aiming to put together researchers and practitioners coming from different fields such as Decision Theory, Discrete Mathematics, Theoretical Computer Science and Artificial Intelligence in order to improve decision support in the presence of massive data bases, combinatorial structures, partial and/or uncertain information and distributed, possibly inter-operating decision makers. Such problems arise in several real-world decision making problems such as humanitarian logistics, epidemiology, risk assessment and management, e-government, electronic commerce, and the implementation of recommender systems.

This area has been very active, particularly in computer science where there are a host of applications.

I was asked to give one of the invited talks, and spoke on “An Operations Research Look at Voting”. I was a little worried about giving this talk, since my main qualifications come from papers published twenty years ago. When I was a doctoral student at Georgia Tech, I worked with John Bartholdi and Craig Tovey on computational issues in voting. Being the first to look at those issues, we got to prove some of the basic results in the area. These include

- For some natural voting rules, it is NP-hard to determine who the winner is.

- For some natural voting rules, it is NP-hard to determine how to manipulate the rule (where manipulation means misrepresenting your preferences so as to get a preferred outcome).

- For some natural voting rules, optimally using the powers of a chair to form subcommittees or otherwise manipulate the voting process is NP-hard.

We published this work in Social Choice and Welfare (after getting it rejected from more mainstream economics journals) where … it was soundly ignored for 15 years. No one referred to the papers; no one followed up on the papers; no one cared about the papers at all!

This work was my job talk in 1987/88, and it got me a wonderful job here at the Tepper School (then GSIA), Carnegie Mellon. And, without Google Scholar, it was not obvious that the papers were being ignored, so they added to my vita, and made it a bit easier to pass through the various steps.

But I did like the work a lot, and regretted (and still regret) that my economist colleagues did not take computational limits more seriously in their models.

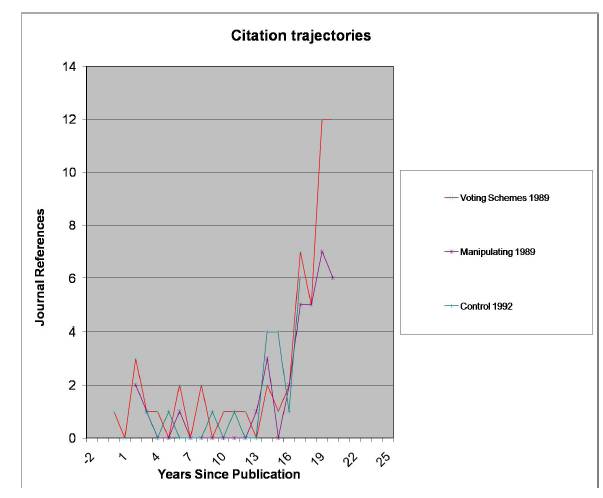

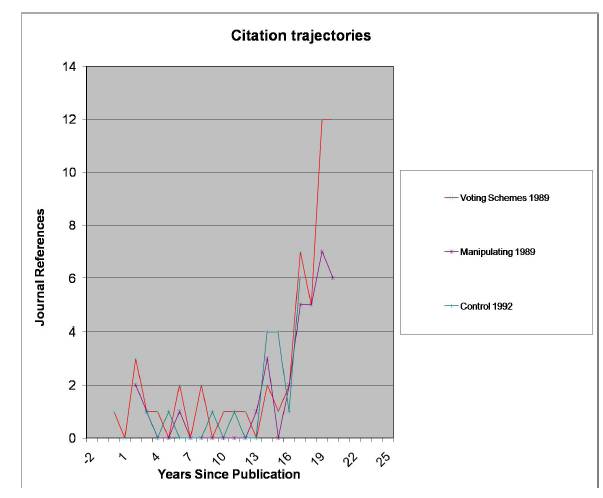

But then something amazing happened about 5 years ago: people started referring to the papers! The references were mainly in computer science, but at least the papers were being recognized. The counts of these papers in the Web of Science (formerly Science Citation Index) are particularly striking. In the associated graph, the x-axis is years since publication; the y-axis is the number of references in Web of Science in that year (Google Scholar numbers are higher of course, but there is a bias towards more recent papers there). In my talk, I compare that graph to my “normal” papers, which reach a peak after 4 or 5 years and then decrease. It is really gratifying to see the interest in these papers along with all the really cool new results people are getting.

I closed off the talk with some work I have done recently on creating voting trees. Suppose there are three candidates, “a”, “b”, and “c”, and you really like candidate “a”. Many voting systems proceed as a series of comparisons between two alternatives (think of standard parliamentary procedure). If you are the chairperson, you might try to bring the candidates forward so as to increase the chances of “a” winning. In fact, if you set the agenda to be “b” against “c” and the winner against “a”, then “a” will win as long as “a” beats someone (and no one beats everyone). In this problem, the goal is to do the manipulation without knowing other voters’ preferences.

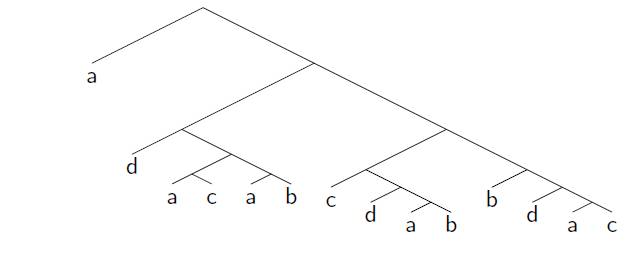

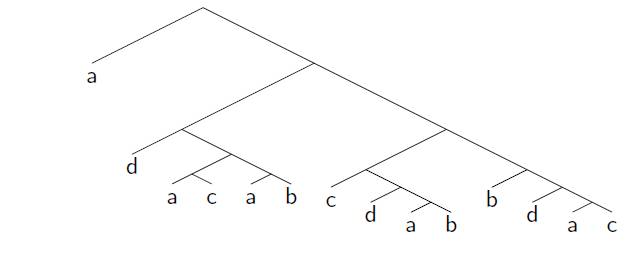

Can you do this for four candidates? If you want “a” to win, “a” must be in the top cycle: the smallest group of candidates (perhaps the entire set of candidates) in which every member beats every candidate outside the group. A “Condorcet winner” is the smallest possible top cycle: if some candidate beats all the other candidates one-on-one, then that candidate must win any voting tree it occurs in. So, assuming “a” is in the top cycle, can you create a voting tree so that “a” wins with four candidates? The answer is yes, but it is a bit complicated: first “a” goes against “c” with the winner against “d” then the winner against “b” who plays the winner of (“a” goes against “b” with the winner against “d” …) …. actually, the minimum tree has 14 leaves! I am biased, but I think the tree is beautiful, and it goes to show how hard it is to manipulate agendas without knowledge of others’ preferences. I am in the process of generating the trees on 4 candidates: there is a very natural rule (“Copeland winner with Copeland loser tie break”: see the presentation for definitions) that requires more than 32 leaves (if an implementation tree for it exists).
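
The top cycle itself is easy to compute, even though building a tree that exploits it is not. Below is a minimal sketch (the function name `top_cycle` and the example data are illustrative, not from the slides). It assumes a tournament with no ties and uses a standard fact about tournaments: the top cycle is exactly the set of candidates who can reach every other candidate through a chain of pairwise wins.

```python
def top_cycle(candidates, beats):
    """Return the top cycle of a tournament as a set.

    candidates -- list of candidate names
    beats      -- set of (x, y) pairs meaning x beats y one-on-one;
                  assumed to contain exactly one orientation of each pair
    """
    def reachable(start):
        # Depth-first search along "beats" edges.
        seen = {start}
        stack = [start]
        while stack:
            x = stack.pop()
            for y in candidates:
                if y not in seen and (x, y) in beats:
                    seen.add(y)
                    stack.append(y)
        return seen

    everyone = set(candidates)
    return {x for x in candidates if reachable(x) == everyone}

candidates = ["a", "b", "c", "d"]

# a, b, c form a cycle and all beat d: the top cycle is {a, b, c}.
cycle_over_d = {("a", "b"), ("b", "c"), ("c", "a"),
                ("a", "d"), ("b", "d"), ("c", "d")}

# a beats everyone (a Condorcet winner): the top cycle is just {a}.
condorcet = {("a", "b"), ("a", "c"), ("a", "d"),
             ("b", "c"), ("c", "d"), ("d", "b")}

# a > b > c > d > a, with a > c and b > d: everyone is in the top cycle.
full_cycle = {("a", "b"), ("b", "c"), ("c", "d"), ("d", "a"),
              ("a", "c"), ("b", "d")}
```

In the first example “d” can never win a voting tree; in the third, every candidate is a possible target for an agenda-setter.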

Sanjay Srivastava and I made a conjecture almost 15 years ago that would imply that this sort of manipulation would be possible no matter how many candidates. Little progress has been made but I think it is still a great problem (the economists know this as implementation by backwards induction and characterizing rules implementable on trees is an important problem in social choice/mechanism design).

If you want more details on all this, here are my slides. The references are

- M.A. Trick, Small Binary Voting Trees, First International Workshop on Computational Social Choice, Amsterdam, Netherlands, 500-511 (2006).

- S. Srivastava and M.A. Trick, Sophisticated voting rules: The two tournament case, Social Choice and Welfare, 13: 275-289 (1996).

- J.J. Bartholdi, C. A. Tovey and M. A. Trick, How hard is it to control an election? A slightly revised version of this appeared in Mathl. Comput. Modelling (Special Issue on Formal Theories of Politics) 16(8/9):27-40 (1992).

- J.J. Bartholdi, C. A. Tovey and M. A. Trick, The computational difficulty of manipulating an election, Social Choice and Welfare 6:227-241 (1989).

- J.J. Bartholdi, C. A. Tovey and M. A. Trick, Voting schemes for which it can be difficult to tell who won the election, Social Choice and Welfare 6:157-165 (1989).

Oh, and Venice is a beautiful place to visit. But you might have heard that elsewhere.

Tom Magnanti has been appointed President of the Singapore University of Technology and Design, Singapore’s so-called “fourth university”. Tom, of course, is one of the preeminent researchers and administrators in operations research. He has written lots of influential papers and books. His book with Bradley and Hax on Applied Mathematical Programming had a huge effect on me (take that Russ Ackoff) and is the sort of book the field badly needs now.
